Highest hotlink thrust: a Chain of Thought example

- Mark Loftus

- Feb 12, 2024


Let’s think this through as an anagram?

Chain of Thought prompting

In a recent post I reviewed what I had learned from writing many, many prompts and prompt chains for Text Alchemy. One of my comments referred to Chain of Thought (CoT) prompting, which offers a way of scripting LLMs to give responses that are qualitatively different from the generic ones produced by ‘naive’ or unstructured prompts.
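In its simplest zero-shot form, CoT prompting means appending a reasoning cue to the task, such as the ‘Let’s think this through step by step’ used later in this post. The sketch below is only illustrative: it builds the same request with and without that cue, using the role/content message shape that chat-style LLM APIs accept. The helper name and the task wording are hypothetical, and no model is called.

```python
# Minimal sketch: one task, sent naively and with a zero-shot CoT cue.
# build_messages and the task text are hypothetical; no API call is made.
COT_CUE = "Let's think this through step by step."


def build_messages(task: str, chain_of_thought: bool = False) -> list[dict[str, str]]:
    """Return a single-turn chat message list, optionally ending with the CoT cue."""
    content = f"{task}\n\n{COT_CUE}" if chain_of_thought else task
    return [{"role": "user", "content": content}]


task = "Create an anagram of the name <your name>."  # placeholder task wording
naive = build_messages(task)
cot = build_messages(task, chain_of_thought=True)
print(cot[0]["content"])
```

The only difference between the two requests is the trailing cue; everything else about the prompt stays the same.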

People immersed in this field will know how sophisticated Chain of Thought prompting can become (I found this post a really helpful guide to the directions in which CoT is likely to develop: through Tree of Thought prompting and on to the more abstract Graph of Thought and Algorithm of Thought prompting).

But a frequent response to my post was a request for examples of the difference that CoT prompting makes. I struggled to find crisp, clear examples until I stumbled across Unlikely.ai and its founder, William Tunstall-Pedoe (best known for his role in the development of Amazon Alexa).

Many years ago he created an anagram-maker, which has the dubious distinction of having been used to create the anagrams in Dan Brown’s best-seller The Da Vinci Code (takes me back!). Most recently, Unlikely.ai have released a cryptic crossword solver. Solving anagrams and solving cryptic crosswords are both interesting computational challenges.

Anagrams & ChatGPT

So I thought I’d see how ChatGPT could handle anagrams.

Simple answer: not well.

Input-Output prompt

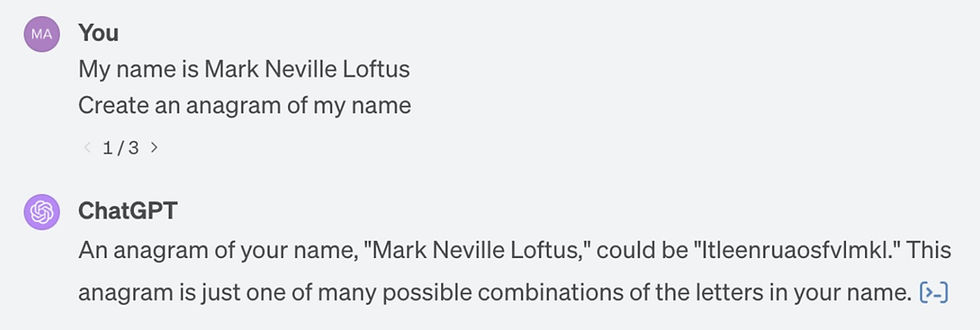

Here’s the naive Input-Output prompt, using my name:

Chain of Thought prompt

And here’s the start of the response with the simple addition of ‘Let’s think this through step by step’:

As the response continues, we see evidence of ChatGPT reasoning about the task it’s been set, and about its own limitations in relation to this task:

And finally it concludes that it can’t generate an anagram of my name.

Should we leave it there?

Chain of Thought with Self-Consistency

What if we use the next level of CoT prompting: Chain of Thought with Self-Consistency?

This involves asking the LLM to construct multiple chains of thought, evaluate each one and select the chain it predicts will be the most effective and coherent.
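In the published form of self-consistency, the chains are generated independently (typically by sampling the model several times at a non-zero temperature) and the final answer is chosen by majority vote. The single-prompt version described here instead asks the model to do the comparison itself. A minimal sketch of the voting step, assuming the chains have already been collected as (reasoning, answer) pairs; the function name and the sample chains are invented for illustration:

```python
from collections import Counter


def self_consistent_answer(chains: list[tuple[str, str]]) -> tuple[str, float]:
    """Majority vote over the final answers of several reasoning chains.

    Each chain is a (reasoning, final_answer) pair. Returns the most common
    answer and the fraction of chains that agree with it.
    """
    if not chains:
        raise ValueError("need at least one chain")
    votes = Counter(answer for _reasoning, answer in chains)
    answer, count = votes.most_common(1)[0]
    return answer, count / len(chains)


# Illustrative chains only; in practice each would come from a separate model call.
chains = [
    ("'listen' and 'silent' both sort to 'eilnst' ...", "yes"),
    ("Match letters one by one: l-i-s-t-e-n ...", "yes"),
    ("'silent' has an extra letter ...", "no"),
]
print(self_consistent_answer(chains))  # ('yes', 0.6666666666666666)
```

The agreement fraction is a rough confidence signal: a narrow majority suggests the chains disagree and the answer deserves a closer look.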

Here’s my prompt:

And ChatGPT outlines the three approaches and gives potential applications and limitations for each. For brevity, I’ll just record the headline approaches:

### 1. Brute Force Method

### 2. Pattern Matching and Dictionary Lookup

### 3. Probabilistic and Machine Learning Models
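A quick count shows why the brute-force approach struggles. A string of n letters, in which letters repeat k₁, k₂, … times, has n! / (k₁! k₂! …) distinct orderings. The 17-letter string “Itleenruaosfvlmkl” that appears later in this post contains three l’s and two e’s, so it has 17! / (3! · 2!) = 29,640,619,008,000 orderings. That is before deciding where the word breaks go, and it would take over eight hours even at a hypothetical billion checks per second. A small sketch of the count (the function name is illustrative):

```python
from collections import Counter
from math import factorial


def distinct_arrangements(text: str) -> int:
    """Distinct orderings of the letters in text: n! / (k1! * k2! * ...)."""
    counts = Counter(c for c in text.lower() if c.isalpha())
    total = factorial(sum(counts.values()))
    for k in counts.values():
        total //= factorial(k)  # each division is exact (multinomial coefficient)
    return total


print(distinct_arrangements("Mark"))               # 24
print(distinct_arrangements("Itleenruaosfvlmkl"))  # 29640619008000
```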

A natural next step is to ask ChatGPT to evaluate which approach is likely to work best given what it knows about itself:

And then? Another Chain of Thought prompt:

And again, for brevity, I’ll just record the headline stages:

### 1. Initial Analysis and Preparation

### 2. Dictionary Lookup for Base Words

### 3. Pattern Matching for Word Formation

### 4. Iterative Refinement

### 5. Evaluation and Finalization

### 6. Presentation
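The core of this plan (dictionary lookup for base words, then combining them so every letter is used exactly once) can be sketched as a small backtracking search. This is not ChatGPT’s internal process or Unlikely.ai’s method, just one conventional way to implement those stages. The toy word list is hypothetical and deliberately seeded with the words of this post’s title; a real solver would load a full dictionary and would need much stronger pruning.

```python
from collections import Counter


def letter_counts(text: str) -> Counter:
    """Letter multiset of text, ignoring case, spaces and punctuation."""
    return Counter(c for c in text.lower() if c.isalpha())


def find_anagrams(phrase: str, words: list[str], max_words: int = 4) -> list[str]:
    """Multi-word anagrams of phrase built only from words in the given word list."""
    target = letter_counts(phrase)
    # Dictionary lookup: keep non-empty words whose letters all fit inside the phrase.
    candidates = sorted(
        {w.lower() for w in words if letter_counts(w) and not (letter_counts(w) - target)}
    )
    results: list[str] = []

    def search(remaining: Counter, start: int, chosen: list[str]) -> None:
        if not remaining:  # every letter used exactly once
            results.append(" ".join(chosen))
            return
        if len(chosen) == max_words:
            return
        # Only try words at or after `start`, so each combination is found once.
        for i in range(start, len(candidates)):
            need = letter_counts(candidates[i])
            if not (need - remaining):  # the word fits in the letters still unused
                search(remaining - need, i, chosen + [candidates[i]])

    search(target, 0, [])
    return results


toy_words = ["highest", "hotlink", "thrust", "lets", "think", "this", "through"]
print(find_anagrams("Let's think this through", toy_words))
# ['highest hotlink thrust', 'lets think this through']
```

The result only ever contains words from the list, which is why the quality of the dictionary (stage 2) largely decides how readable the final anagram is.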

Festival Mourn Kell

And finally…

Maybe not perfect, but certainly progress from “Itleenruaosfvlmkl”.
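It’s easy to confirm that “Festival Mourn Kell” and “Itleenruaosfvlmkl” use exactly the same 17 letters. The progress lies in grouping them into recognisable words rather than in the letters themselves. A minimal check, ignoring case, spaces and punctuation (the function name is illustrative):

```python
from collections import Counter


def is_anagram(a: str, b: str) -> bool:
    """True if a and b use exactly the same letters, ignoring case, spaces and punctuation."""
    def letters(s: str) -> Counter:
        return Counter(c for c in s.lower() if c.isalpha())
    return letters(a) == letters(b)


print(is_anagram("Festival Mourn Kell", "Itleenruaosfvlmkl"))  # True
print(is_anagram("Festival Mourn Kell", "Mark Loftus"))        # False
```

The check says nothing about whether the result reads well. That judgement is the part the CoT prompts were asking ChatGPT to make.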
